Practice Exam 2

Google Cloud Platform (GCP) Associate Cloud Engineer (ACE) certification study notes. This guide will help you with quick revision before the exam; you can use it as study notes for your preparation.




- You host a static website on Cloud Storage. Recently, you began to include links to PDF files on this site. Currently, when users click on the links to these PDF files, their browsers prompt them to save the file onto their local system. Instead, you want the clicked PDF files to be displayed within the browser window directly, without prompting the user to save the file locally. What should you do?

- A. Enable Cloud CDN on the website frontend.

- B. Enable ‘Share publicly’ on the PDF file objects.

- C. Set Content-Type metadata to application/pdf on the PDF file objects.

- D. Add a label to the storage bucket with a key of Content-Type and value of application/pdf.

Answer

Correct answer: C
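The reasoning behind option C is that a browser decides whether to render or download a file largely from its Content-Type header, and a generic type tends to trigger a download prompt. As a minimal sketch (the helper name `content_type_for` and the file names are illustrative, not part of any Google tool), Python's standard `mimetypes` module shows the value a PDF object should carry:

```python
import mimetypes


def content_type_for(filename: str) -> str:
    """Guess a Content-Type from the file extension.

    Falls back to the generic binary type, which browsers usually
    offer to download instead of displaying inline.
    """
    guessed, _encoding = mimetypes.guess_type(filename)
    return guessed or "application/octet-stream"


if __name__ == "__main__":
    # Hypothetical object names, used only for illustration.
    for name in ("brochure.pdf", "payload.zzzunknown"):
        print(name, "->", content_type_for(name))
```

If an object was uploaded with the generic type, updating its Content-Type metadata to `application/pdf` is the change the question asks for.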

- You have an application that looks for its licensing server on the IP 10.0.3.21. You need to deploy the licensing server on Compute Engine. You do not want to change the configuration of the application and want the application to be able to reach the licensing server. What should you do?

- A. Reserve the IP 10.0.3.21 as a static internal IP address using gcloud and assign it to the licensing server.

- B. Reserve the IP 10.0.3.21 as a static public IP address using gcloud and assign it to the licensing server.

- C. Use the IP 10.0.3.21 as a custom ephemeral IP address and assign it to the licensing server.

- D. Start the licensing server with an automatic ephemeral IP address, and then promote it to a static internal IP address.

Answer

Correct answer: A

- You are deploying an application to App Engine. You want the number of instances to scale based on request rate. You need at least 3 unoccupied instances at all times. Which scaling type should you use?

- A. Manual Scaling with 3 instances.

- B. Basic Scaling with min_instances set to 3.

- C. Basic Scaling with max_instances set to 3.

- D. Automatic Scaling with min_idle_instances set to 3.

Answer

Correct answer: D

- You are the project owner of a GCP project and want to delegate control to colleagues to manage buckets and files in Cloud Storage. You want to follow Google-recommended practices. Which IAM roles should you grant your colleagues?

- A. Project Editor.

- B. Storage Admin.

- C. Storage Object Admin.

- D. Storage Object Creator.

Answer

Correct answer: B

- You need to update a deployment in Deployment Manager without any resource downtime in the deployment. Which command should you use?

- A. gcloud deployment-manager deployments create --config

- B. gcloud deployment-manager deployments update --config

- C. gcloud deployment-manager resources create --config

- D. gcloud deployment-manager resources update --config

Answer

Correct answer: B


- You are running an application on multiple virtual machines within a Managed Instance Group and have autoscaling enabled. The autoscaling policy is configured so that additional instances are added to the group if the CPU utilization of instances goes above 80%. VMs are added until the instance group reaches its maximum limit of five VMs or until CPU utilization of instances lowers to 80%. The initial delay for HTTP health checks against the instances is set to 30 seconds. The virtual machine instances take around three minutes to become available for users. You observe that when the instance group autoscales, it adds more instances than necessary to support the levels of end-user traffic. You want to properly maintain instance group sizes when autoscaling. What should you do?

- A. Set the maximum number of instances to 1.

- B. Decrease the maximum number of instances to 3.

- C. Use a TCP health check instead of an HTTP health check.

- D. Increase the initial delay of the HTTP health check to 200 seconds.

Answer

Correct answer: D

- You have 32 GB of data in a single file that you need to upload to a Nearline Storage bucket. The WAN connection you are using is rated at 1 Gbps, and you are the only one on the connection. You want to use as much of the rated 1 Gbps as possible to transfer the file rapidly. How should you upload the file?

- A. Use the GCP Console to transfer the file instead of gsutil.

- B. Enable parallel composite uploads using gsutil on the file transfer.

- C. Decrease the TCP window size on the machine initiating the transfer.

- D. Change the storage class of the bucket from Nearline to Multi-Regional.

Answer

Correct answer: B
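To see why parallelism matters here, it helps to work out the best-case transfer time and how a single file could be cut into independently uploaded pieces. The sketch below is only an illustration of the idea: the function names are hypothetical, 32 GB is read as decimal gigabytes, and the part count of 8 is an arbitrary assumption (gsutil chooses its own component sizes).

```python
def ideal_transfer_seconds(size_bytes: int, link_bits_per_second: int) -> float:
    """Best-case transfer time if the link is fully saturated."""
    return size_bytes * 8 / link_bits_per_second


def split_into_ranges(size_bytes: int, parts: int) -> list[tuple[int, int]]:
    """Return (start, end) byte offsets, end exclusive, that cover the file."""
    base, extra = divmod(size_bytes, parts)
    ranges = []
    start = 0
    for index in range(parts):
        # Spread any remainder over the first few parts.
        length = base + (1 if index < extra else 0)
        ranges.append((start, start + length))
        start += length
    return ranges


if __name__ == "__main__":
    size = 32 * 10**9   # 32 GB, decimal (assumption)
    link = 10**9        # 1 Gbps
    print("ideal seconds:", ideal_transfer_seconds(size, link))
    for start, end in split_into_ranges(size, 8):
        print(f"part covers bytes [{start}, {end})")
```

A single stream rarely reaches the ideal figure; uploading the parts concurrently and composing them afterwards is what lets the transfer approach the rated bandwidth.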

- You deployed an App Engine application using gcloud app deploy, but it did not deploy to the intended project. You want to find out why this happened and where the application deployed. What should you do?

- A. Check the app.yaml file for your application and check project settings.

- B. Check the web-application.xml file for your application and check project settings.

- C. Go to Deployment Manager and review settings for deployment of applications.

- D. Go to Cloud Shell and run gcloud config list to review the Google Cloud configuration used for deployment.

Answer

Correct answer: D

- You want to verify the IAM users and roles assigned within a GCP project named my-project. What should you do?

- A. Run gcloud iam roles list. Review the output section.

- B. Run gcloud iam service-accounts list. Review the output section.

- C. Navigate to the project and then to the IAM section in the GCP Console. Review the members and roles.

- D. Navigate to the project and then to the Roles section in the GCP Console. Review the roles and status.

Answer

Correct answer: C

- You need to select and configure compute resources for a set of batch processing jobs. These jobs take around 2 hours to complete and are run nightly. You want to minimize service costs. What should you do?

- A. Select Google Kubernetes Engine. Use a single-node cluster with a small instance type.

- B. Select Google Kubernetes Engine. Use a three-node cluster with micro instance types.

- C. Select Compute Engine. Use preemptible VM instances of the appropriate standard machine type.

- D. Select Compute Engine. Use VM instance types that support micro bursting.

Answer

Correct answer: C

- You want to select and configure a cost-effective solution for relational data on Google Cloud Platform. You are working with a small set of operational data in one geographic location. You need to support point-in-time recovery. What should you do?

- A. Select Cloud SQL (MySQL). Verify that the enable binary logging option is selected.

- B. Select Cloud SQL (MySQL). Select the create failover replicas option.

- C. Select Cloud Spanner. Set up your instance with 2 nodes.

- D. Select Cloud Spanner. Set up your instance as multi-regional.

Answer

Correct answer: A

- You are hosting an application on bare-metal servers in your own data center. The application needs access to Cloud Storage. However, security policies prevent the servers hosting the application from having public IP addresses or access to the internet. You want to follow Google-recommended practices to provide the application with access to Cloud Storage. What should you do?

- A. 1. Use nslookup to get the IP address for storage.googleapis.com. 2. Negotiate with the security team to be able to give a public IP address to the servers. 3. Only allow egress traffic from those servers to the IP addresses for storage.googleapis.com.

- B. 1. Using Cloud VPN, create a VPN tunnel to a Virtual Private Cloud (VPC) in Google Cloud. 2. In this VPC, create a Compute Engine instance and install the Squid proxy server on this instance. 3. Configure your servers to use that instance as a proxy to access Cloud Storage.

- C. 1. Use Migrate for Compute Engine (formerly known as Velostrata) to migrate those servers to Compute Engine. 2. Create an internal load balancer (ILB) that uses storage.googleapis.com as backend. 3. Configure your new instances to use this ILB as proxy.

- D. 1. Using Cloud VPN or Interconnect, create a tunnel to a VPC in Google Cloud. 2. Use Cloud Router to create a custom route advertisement for 199.36.153.4/30. Announce that network to your on-premises network through the VPN tunnel. 3. In your on-premises network, configure your DNS server to resolve *.googleapis.com as a CNAME to restricted.googleapis.com.

Answer

Correct answer: D

- Your company has a Google Cloud Platform project that uses BigQuery for data warehousing. Your data science team changes frequently and has few members. You need to allow members of this team to perform queries. You want to follow Google-recommended practices. What should you do?

- A. 1. Create an IAM entry for each data scientist’s user account. 2. Assign the BigQuery jobUser role to the group.

- B. 1. Create an IAM entry for each data scientist’s user account. 2. Assign the BigQuery dataViewer user role to the group.

- C. 1. Create a dedicated Google group in Cloud Identity. 2. Add each data scientist’s user account to the group. 3. Assign the BigQuery jobUser role to the group.

- D. 1. Create a dedicated Google group in Cloud Identity. 2. Add each data scientist’s user account to the group. 3. Assign the BigQuery dataViewer user role to the group.

Answer

Correct answer: C

- You are given a project with a single Virtual Private Cloud (VPC) and a single subnetwork in the us-central1 region. There is a Compute Engine instance hosting an application in this subnetwork. You need to deploy a new instance in the same project in the europe-west1 region. This new instance needs access to the application. You want to follow Google-recommended practices. What should you do?

- A. 1. Create a subnetwork in the same VPC, in europe-west1. 2. Create the new instance in the new subnetwork and use the first instance’s private address as the endpoint.

- B. 1. Create a VPC and a subnetwork in europe-west1. 2. Expose the application with an internal load balancer. 3. Create the new instance in the new subnetwork and use the load balancer’s address as the endpoint.

- C. 1. Create a subnetwork in the same VPC, in europe-west1. 2. Use Cloud VPN to connect the two subnetworks. 3. Create the new instance in the new subnetwork and use the first instance’s private address as the endpoint.

- D. 1. Create a VPC and a subnetwork in europe-west1. 2. Peer the 2 VPCs. 3. Create the new instance in the new subnetwork and use the first instance’s private address as the endpoint.

Answer

Correct answer: A

-

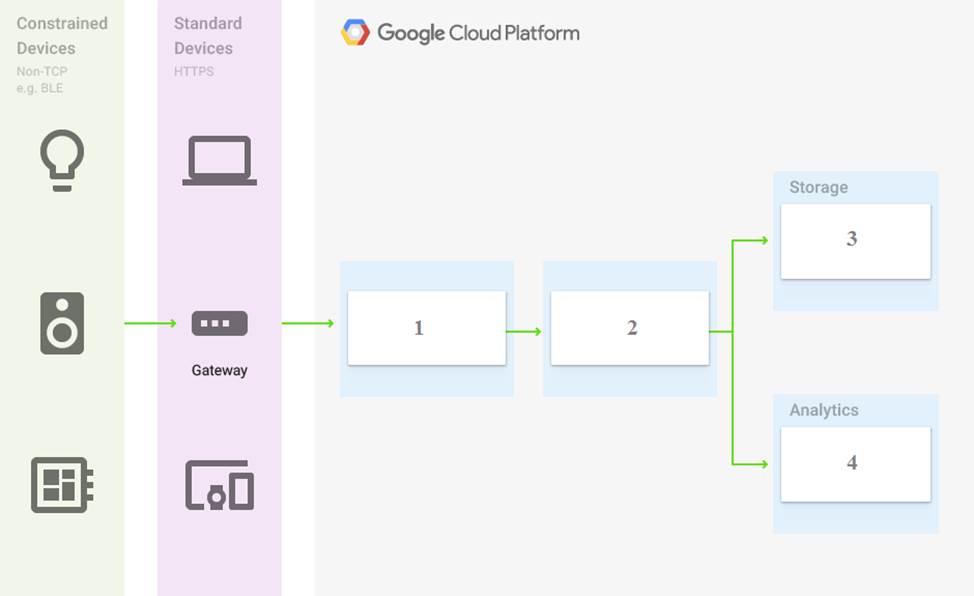

You are building a pipeline to process time-series data. Which Google Cloud Platform services should you put in boxes 1, 2, 3, and 4?

- A. Cloud Pub/Sub, Cloud Dataflow, Cloud Datastore, BigQuery.

- B. Firebase Messages, Cloud Pub/Sub, Cloud Spanner, BigQuery.

- C. Cloud Pub/Sub, Cloud Storage, BigQuery, Cloud Bigtable.

- D. Cloud Pub/Sub, Cloud Dataflow, Cloud Bigtable, BigQuery.

Answer

Correct answer: D

- For analysis purposes, you need to send all the logs from all of your Compute Engine instances to a BigQuery dataset called platform-logs. You have already installed the Cloud Logging agent on all the instances. You want to minimize cost. What should you do?

- A. 1. Give the BigQuery Data Editor role on the platform-logs dataset to the service accounts used by your instances. 2. Update your instances’ metadata to add the following value: logs-destination: bq://platform-logs.

- B. 1. In Cloud Logging, create a logs export with a Cloud Pub/Sub topic called logs as a sink. 2. Create a Cloud Function that is triggered by messages in the logs topic. 3. Configure that Cloud Function to drop logs that are not from Compute Engine and to insert Compute Engine logs in the platform-logs dataset.

- C. 1. In Cloud Logging, create a filter to view only Compute Engine logs. 2. Click Create Export. 3. Choose BigQuery as Sink Service, and the platform-logs dataset as Sink Destination.

- D. 1. Create a Cloud Function that has the BigQuery User role on the platform-logs dataset. 2. Configure this Cloud Function to create a BigQuery Job that executes this query: INSERT INTO dataset.platform-logs (timestamp, log) SELECT timestamp, log FROM compute.logs WHERE timestamp > DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY) 3. Use Cloud Scheduler to trigger this Cloud Function once a day.

Answer

Correct answer: C

- You want to deploy an application on Cloud Run that processes messages from a Cloud Pub/Sub topic. You want to follow Google-recommended practices. What should you do?

- A. 1. Create a Cloud Function that uses a Cloud Pub/Sub trigger on that topic. 2. Call your application on Cloud Run from the Cloud Function for every message.

- B. 1. Grant the Pub/Sub Subscriber role to the service account used by Cloud Run. 2. Create a Cloud Pub/Sub subscription for that topic. 3. Make your application pull messages from that subscription.

- C. 1. Create a service account. 2. Give the Cloud Run Invoker role to that service account for your Cloud Run application. 3. Create a Cloud Pub/Sub subscription that uses that service account and uses your Cloud Run application as the push endpoint.

- D. 1. Deploy your application on Cloud Run on GKE with the connectivity set to Internal. 2. Create a Cloud Pub/Sub subscription for that topic. 3. In the same Google Kubernetes Engine cluster as your application, deploy a container that takes the messages and sends them to your application.

Answer

Correct answer: C

- Your projects incurred more costs than you expected last month. Your research reveals that a development GKE container emitted a huge number of logs, which resulted in higher costs. You want to disable the logs quickly using the minimum number of steps. What should you do?

- A. 1. Go to the Logs ingestion window in Stackdriver Logging, and disable the log source for the GKE container resource.

- B. 1. Go to the Logs ingestion window in Stackdriver Logging, and disable the log source for the GKE Cluster Operations resource.

- C. 1. Go to the GKE console, and delete existing clusters. 2. Recreate a new cluster. 3. Clear the option to enable legacy Stackdriver Logging.

- D. 1. Go to the GKE console, and delete existing clusters. 2. Recreate a new cluster. 3. Clear the option to enable legacy Stackdriver Monitoring.

Answer

Correct answer: A

-

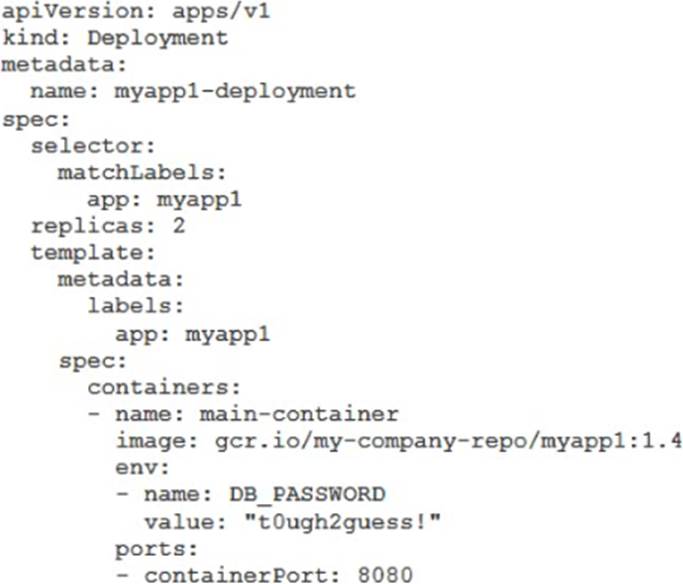

You’ve deployed a microservice called myapp1 to a Google Kubernetes Engine cluster using the YAML file specified below. You need to refactor this configuration so that the database password is not stored in plain text. You want to follow Google-recommended practices. What should you do?

- A. Store the database password inside the Docker image of the container, not in the YAML file.

- B. Store the database password inside a Secret object. Modify the YAML file to populate the DB_PASSWORD environment variable from the Secret.

- C. Store the database password inside a ConfigMap object. Modify the YAML file to populate the DB_PASSWORD environment variable from the ConfigMap.

- D. Store the database password in a file inside a Kubernetes persistent volume, and use a persistent volume claim to mount the volume to the container.

Answer

Correct answer: B
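Option B can be sketched as two manifest fragments: a Secret that holds the value, and a container environment entry that references it. The Python below only builds those structures as dictionaries and prints them as JSON; the Secret name `myapp1-db`, the key `DB_PASSWORD`, and the placeholder value are assumptions for illustration, since the original YAML is only available as an image.

```python
import base64
import json


def build_secret(name: str, key: str, value: str) -> dict:
    """Build a Kubernetes Secret manifest; `data` values are base64-encoded."""
    encoded = base64.b64encode(value.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {key: encoded},
    }


def env_from_secret(env_name: str, secret_name: str, key: str) -> dict:
    """Build a container `env` entry that reads its value from a Secret."""
    return {
        "name": env_name,
        "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}},
    }


if __name__ == "__main__":
    # Placeholder value only; never commit a real password.
    secret = build_secret("myapp1-db", "DB_PASSWORD", "example-only")
    env_entry = env_from_secret("DB_PASSWORD", "myapp1-db", "DB_PASSWORD")
    print(json.dumps(secret, indent=2))
    print(json.dumps(env_entry, indent=2))
```

Base64 is an encoding, not encryption; the benefit is that the password leaves the Deployment manifest and can be access-controlled separately from it.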

- You have an application running in Google Kubernetes Engine (GKE) with cluster autoscaling enabled. The application exposes a TCP endpoint. There are several replicas of this application. You have a Compute Engine instance in the same region, but in another Virtual Private Cloud (VPC), called gce-network, that has no overlapping IP ranges with the first VPC. This instance needs to connect to the application on GKE. You want to minimize effort. What should you do?

- A. 1. In GKE, create a Service of type LoadBalancer that uses the application’s Pods as backend. 2. Set the service’s externalTrafficPolicy to Cluster. 3. Configure the Compute Engine instance to use the address of the load balancer that has been created.

- B. 1. In GKE, create a Service of type NodePort that uses the application’s Pods as backend. 2. Create a Compute Engine instance called proxy with 2 network interfaces, one in each VPC. 3. Use iptables on this instance to forward traffic from gce-network to the GKE nodes. 4. Configure the Compute Engine instance to use the address of proxy in gce-network as endpoint.

- C. 1. In GKE, create a Service of type LoadBalancer that uses the application’s Pods as backend. 2. Add an annotation to this service: cloud.google.com/load-balancer-type: Internal 3. Peer the two VPCs together. 4. Configure the Compute Engine instance to use the address of the load balancer that has been created.

- D. 1. In GKE, create a Service of type LoadBalancer that uses the application’s Pods as backend. 2. Add a Cloud Armor Security Policy to the load balancer that whitelists the internal IPs of the MIG’s instances. 3. Configure the Compute Engine instance to use the address of the load balancer that has been created.

Answer

Correct answer: A

- You are using Container Registry to centrally store your company’s container images in a separate project. In another project, you want to create a Google Kubernetes Engine (GKE) cluster. You want to ensure that Kubernetes can download images from Container Registry. What should you do?

- A. In the project where the images are stored, grant the Storage Object Viewer IAM role to the service account used by the Kubernetes nodes.

- B. When you create the GKE cluster, choose the Allow full access to all Cloud APIs option under ‘Access scopes’.

- C. Create a service account, and give it access to Cloud Storage. Create a P12 key for this service account and use it as an imagePullSecrets in Kubernetes.

- D. Configure the ACLs on each image in Cloud Storage to give read-only access to the default Compute Engine service account.

Answer

Correct answer: A

-

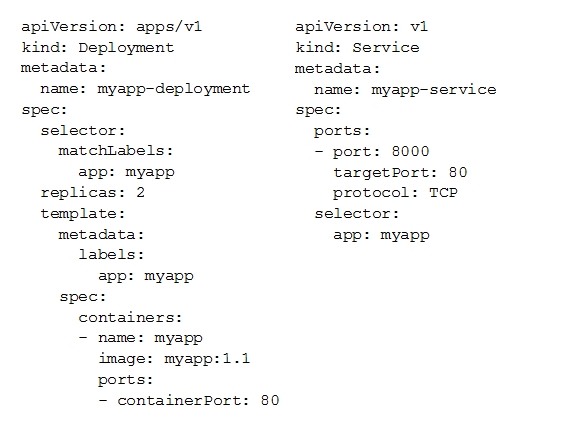

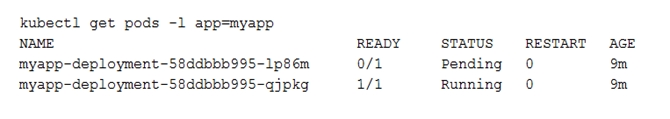

You deployed a new application inside your Google Kubernetes Engine cluster using the YAML file specified below. You check the status of the deployed pods and notice that one of them is still in PENDING status. You want to find out why the pod is stuck in pending status. What should you do?

- A. Review details of the myapp-service Service object and check for error messages.

- B. Review details of the myapp-deployment Deployment object and check for error messages.

- C. Review details of myapp-deployment-58ddbbb995-lp86m Pod and check for warning messages.

- D. View logs of the container in myapp-deployment-58ddbbb995-lp86m pod and check for warning messages.

Answer

Correct answer: C

- You are setting up a Windows VM on Compute Engine and want to make sure you can log in to the VM via RDP. What should you do?

- A. After the VM has been created, use your Google Account credentials to log in to the VM.

- B. After the VM has been created, use gcloud compute reset-windows-password to retrieve the login credentials for the VM.

- C. When creating the VM, add metadata to the instance using ‘windows-password’ as the key and a password as the value.

- D. After the VM has been created, download the JSON Private Key for the default Compute Engine service account. Use the credentials in the JSON file to log in to the VM.

Answer

Correct answer: B

- You want to configure an SSH connection to a single Compute Engine instance for users in the dev1 group. This instance is the only resource in this particular Google Cloud Platform project that the dev1 users should be able to connect to. What should you do?

- A. Set metadata to enable-oslogin=true for the instance. Grant the dev1 group the compute.osLogin role. Direct them to use the Cloud Shell to ssh to that instance.

- B. Set metadata to enable-oslogin=true for the instance. Set the service account to no service account for that instance. Direct them to use the Cloud Shell to ssh to that instance.

- C. Enable block project wide keys for the instance. Generate an SSH key for each user in the dev1 group. Distribute the keys to dev1 users and direct them to use their third-party tools to connect.

- D. Enable block project wide keys for the instance. Generate an SSH key and associate the key with that instance. Distribute the key to dev1 users and direct them to use their third-party tools to connect.

Answer

Correct answer: A

- You need to produce a list of the enabled Google Cloud Platform APIs for a GCP project using the gcloud command line in the Cloud Shell. The project name is my-project. What should you do?

- A. Run gcloud projects list to get the project ID, and then run gcloud services list --project

- B. Run gcloud init to set the current project to my-project, and then run gcloud services list --available.

- C. Run gcloud info to view the account value, and then run gcloud services list --account

- D. Run gcloud projects describe to verify the project value, and then run gcloud services list --available.

Answer

Correct answer: A


- You are building a new version of an application hosted in an App Engine environment. You want to test the new version with 1% of users before you completely switch your application over to the new version. What should you do?

- A. Deploy a new version of your application in Google Kubernetes Engine instead of App Engine and then use GCP Console to split traffic.

- B. Deploy a new version of your application in a Compute Engine instance instead of App Engine and then use GCP Console to split traffic.

- C. Deploy a new version as a separate app in App Engine. Then configure App Engine using GCP Console to split traffic between the two apps.

- D. Deploy a new version of your application in App Engine. Then go to App Engine settings in GCP Console and split traffic between the current version and newly deployed versions accordingly.

Answer

Correct answer: D

- You need to provide a cost estimate for a Kubernetes cluster using the GCP pricing calculator for Kubernetes. Your workload requires high IOPs, and you will also be using disk snapshots. You start by entering the number of nodes, average hours, and average days. What should you do next?

- A. Fill in local SSD. Fill in persistent disk storage and snapshot storage.

- B. Fill in local SSD. Add estimated cost for cluster management.

- C. Select Add GPUs. Fill in persistent disk storage and snapshot storage.

- D. Select Add GPUs. Add estimated cost for cluster management.

Answer

Correct answer: A

- You are using Google Kubernetes Engine with autoscaling enabled to host a new application. You want to expose this new application to the public, using HTTPS on a public IP address. What should you do?

- A. Create a Kubernetes Service of type NodePort for your application, and a Kubernetes Ingress to expose this Service via a Cloud Load Balancer.

- B. Create a Kubernetes Service of type ClusterIP for your application. Configure the public DNS name of your application using the IP of this Service.

- C. Create a Kubernetes Service of type NodePort to expose the application on port 443 of each node of the Kubernetes cluster. Configure the public DNS name of your application with the IP of every node of the cluster to achieve load-balancing.

- D. Create a HAProxy pod in the cluster to load-balance the traffic to all the pods of the application. Forward the public traffic to HAProxy with an iptable rule. Configure the DNS name of your application using the public IP of the node HAProxy is running on.

Answer

Correct answer: A

- You need to enable traffic between multiple groups of Compute Engine instances that are currently running two different GCP projects. Each group of Compute Engine instances is running in its own VPC. What should you do?

- A. Verify that both projects are in a GCP Organization. Create a new VPC and add all instances.

- B. Verify that both projects are in a GCP Organization. Share the VPC from one project and request that the Compute Engine instances in the other project use this shared VPC.

- C. Verify that you are the Project Administrator of both projects. Create two new VPCs and add all instances.

- D. Verify that you are the Project Administrator of both projects. Create a new VPC and add all instances.

Answer

Correct answer: B

- You want to add a new auditor to a Google Cloud Platform project. The auditor should be allowed to read, but not modify, all project items. How should you configure the auditor’s permissions?

- A. Create a custom role with view-only project permissions. Add the user’s account to the custom role.

- B. Create a custom role with view-only service permissions. Add the user’s account to the custom role.

- C. Select the built-in IAM project Viewer role. Add the user’s account to this role.

- D. Select the built-in IAM service Viewer role. Add the user’s account to this role.

Answer

Correct answer: C

- You are operating a Google Kubernetes Engine (GKE) cluster for your company where different teams can run non-production workloads. Your Machine Learning (ML) team needs access to Nvidia Tesla P100 GPUs to train their models. You want to minimize effort and cost. What should you do?

- A. Ask your ML team to add the accelerator: gpu annotation to their pod specification.

- B. Recreate all the nodes of the GKE cluster to enable GPUs on all of them.

- C. Create your own Kubernetes cluster on top of Compute Engine with nodes that have GPUs. Dedicate this cluster to your ML team.

- D. Add a new, GPU-enabled, node pool to the GKE cluster. Ask your ML team to add the cloud.google.com/gke-accelerator: nvidia-tesla-p100 nodeSelector to their pod specification.

Answer

Correct answer: D

- Your VMs are running in a subnet that has a subnet mask of 255.255.255.240. The current subnet has no more free IP addresses and you require an additional 10 IP addresses for new VMs. The existing and new VMs should all be able to reach each other without additional routes. What should you do?

- A. Use gcloud to expand the IP range of the current subnet.

- B. Delete the subnet, and recreate it using a wider range of IP addresses.

- C. Create a new project. Use Shared VPC to share the current network with the new project.

- D. Create a new subnet with the same starting IP but a wider range to overwrite the current subnet.

Answer

Correct answer: A
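The arithmetic behind this question can be checked with Python's standard `ipaddress` module. The sketch assumes a hypothetical base address of 10.0.0.0, assumes the current subnet is completely full, and assumes (as I understand Google Cloud's behavior) that four addresses in each subnet's primary range are reserved and unavailable to VMs.

```python
import ipaddress

# Assumption: addresses reserved per primary subnet range
# (network, gateway, second-to-last, broadcast).
RESERVED_PER_SUBNET = 4


def usable_addresses(network: ipaddress.IPv4Network) -> int:
    """Addresses left for VMs after the reserved ones are removed."""
    return network.num_addresses - RESERVED_PER_SUBNET


def smallest_expansion(network: ipaddress.IPv4Network, extra_hosts: int) -> ipaddress.IPv4Network:
    """Widen the range one prefix bit at a time until the extra hosts fit."""
    needed = usable_addresses(network) + extra_hosts
    candidate = network
    while usable_addresses(candidate) < needed:
        candidate = candidate.supernet()
    return candidate


if __name__ == "__main__":
    # 10.0.0.0 is a hypothetical base; only the mask comes from the question.
    current = ipaddress.ip_network("10.0.0.0/255.255.255.240")
    print("current:", current, "usable:", usable_addresses(current))
    expanded = smallest_expansion(current, 10)
    print("expanded:", expanded, "usable:", usable_addresses(expanded))
```

Because the widened range contains the original one, existing VMs keep their addresses and all VMs stay in one subnet, which is why no additional routes are needed.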

- Your organization uses G Suite for communication and collaboration. All users in your organization have a G Suite account. You want to grant some G Suite users access to your Cloud Platform project. What should you do?

- A. Enable Cloud Identity in the GCP Console for your domain.

- B. Grant them the required IAM roles using their G Suite email address.

- C. Create a CSV sheet with all users’ email addresses. Use the gcloud command line tool to convert them into Google Cloud Platform accounts.

- D. In the G Suite console, add the users to a special group called cloud-console-users@yourdomain.com. Rely on the default behavior of the Cloud Platform to grant users access if they are members of this group.

Answer

Correct answer: B

- You have a Google Cloud Platform account with access to both production and development projects. You need to create an automated process to list all compute instances in development and production projects on a daily basis. What should you do?

- A. Create two configurations using gcloud config. Write a script that sets configurations as active, individually. For each configuration, use gcloud compute instances list to get a list of compute resources.

- B. Create two configurations using gsutil config. Write a script that sets configurations as active, individually. For each configuration, use gsutil compute instances list to get a list of compute resources.

- C. Go to Cloud Shell and export this information to Cloud Storage on a daily basis.

- D. Go to GCP Console and export this information to Cloud SQL on a daily basis.

Answer

Correct answer: A
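A daily job along the lines of option A might look like the sketch below. It is a variation rather than a literal transcription: instead of activating each configuration in turn, it passes gcloud's global `--configuration` flag per command, which avoids changing the active configuration. The configuration names `dev` and `prod` are hypothetical and would need to exist already.

```python
import subprocess


def list_instances_command(configuration: str) -> list[str]:
    """Build the gcloud invocation for one named configuration."""
    return [
        "gcloud", "compute", "instances", "list",
        f"--configuration={configuration}",
    ]


def list_all(configurations, run=subprocess.run) -> dict:
    """Run the listing once per configuration and collect the raw output.

    `run` is injectable so the function can be exercised without gcloud.
    """
    results = {}
    for name in configurations:
        completed = run(
            list_instances_command(name),
            capture_output=True, text=True, check=True,
        )
        results[name] = completed.stdout
    return results


if __name__ == "__main__":
    # Requires the gcloud CLI and two existing configurations (assumed names).
    for config_name, output in list_all(["dev", "prod"]).items():
        print(f"== {config_name} ==")
        print(output)
```

Scheduling the script (for example with cron) would provide the daily cadence the question asks for.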

- You have a large 5-TB AVRO file stored in a Cloud Storage bucket. Your analysts are proficient only in SQL and need access to the data stored in this file. You want to find a cost-effective way to complete their request as soon as possible. What should you do?

- A. Load data in Cloud Datastore and run a SQL query against it.

- B. Create a BigQuery table and load data in BigQuery. Run a SQL query on this table and drop this table after you complete your request.

- C. Create external tables in BigQuery that point to Cloud Storage buckets and run a SQL query on these external tables to complete your request.

- D. Create a Hadoop cluster and copy the AVRO file to HDFS by compressing it. Load the file in a Hive table and provide access to your analysts so that they can run SQL queries.

Answer

Correct answer: C

- You need to verify that a Google Cloud Platform service account was created at a particular time. What should you do?

- A. Filter the Activity log to view the Configuration category. Filter the Resource type to Service Account.

- B. Filter the Activity log to view the Configuration category. Filter the Resource type to Google Project.

- C. Filter the Activity log to view the Data Access category. Filter the Resource type to Service Account.

- D. Filter the Activity log to view the Data Access category. Filter the Resource type to Google Project.

Answer

Correct answer: A

- You deployed an LDAP server on Compute Engine that is reachable via TLS through port 636 using UDP. You want to make sure it is reachable by clients over that port. What should you do?

- A. Add the network tag allow-udp-636 to the VM instance running the LDAP server.

- B. Create a route called allow-udp-636 and set the next hop to be the VM instance running the LDAP server.

- C. Add a network tag of your choice to the instance. Create a firewall rule to allow ingress on UDP port 636 for that network tag.

- D. Add a network tag of your choice to the instance running the LDAP server. Create a firewall rule to allow egress on UDP port 636 for that network tag.

Answer

Correct answer: C

- You need to set a budget alert for use of Compute Engine services on one of the three Google Cloud Platform projects that you manage. All three projects are linked to a single billing account. What should you do?

- A. Verify that you are the project billing administrator. Select the associated billing account and create a budget and alert for the appropriate project.

- B. Verify that you are the project billing administrator. Select the associated billing account and create a budget and a custom alert.

- C. Verify that you are the project administrator. Select the associated billing account and create a budget for the appropriate project.

- D. Verify that you are project administrator. Select the associated billing account and create a budget and a custom alert.

Answer

Correct answer: A

- You are migrating a production-critical on-premises application that requires 96 vCPUs to perform its task. You want to make sure the application runs in a similar environment on GCP. What should you do?

- A. When creating the VM, use machine type n1-standard-96.

- B. When creating the VM, use Intel Skylake as the CPU platform.

- C. Create the VM using Compute Engine default settings. Use gcloud to modify the running instance to have 96 vCPUs.

- D. Start the VM using Compute Engine default settings, and adjust as you go based on Rightsizing Recommendations.

Answer

Correct answer: A

- You want to configure a solution for archiving data in a Cloud Storage bucket. The solution must be cost-effective. Data with multiple versions should be archived after 30 days. Previous versions are accessed once a month for reporting. This archive data is also occasionally updated at month-end. What should you do?

- A. Add a bucket lifecycle rule that archives data with newer versions after 30 days to Coldline Storage.

- B. Add a bucket lifecycle rule that archives data with newer versions after 30 days to Nearline Storage.

- C. Add a bucket lifecycle rule that archives data from regional storage after 30 days to Coldline Storage.

- D. Add a bucket lifecycle rule that archives data from regional storage after 30 days to Nearline Storage.

Answer

Correct answer: B
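A lifecycle rule like the one in option B can be sketched as a JSON configuration. The example below builds such a document in Python. Encoding "data with newer versions" as `numNewerVersions: 1` combined with `age: 30` is an assumption about how the rule would be expressed, and the helper name `archive_rule` is hypothetical.

```python
import json


def archive_rule(storage_class, age_days, num_newer_versions=None):
    """Return one lifecycle rule that moves matching objects to storage_class."""
    condition = {"age": age_days}
    if num_newer_versions is not None:
        # Only match object versions that have at least this many newer versions.
        condition["numNewerVersions"] = num_newer_versions
    return {
        "action": {"type": "SetStorageClass", "storageClass": storage_class},
        "condition": condition,
    }


# Assumed encoding of option B: versions older than 30 days that have
# at least one newer version are moved to Nearline.
lifecycle = {"rule": [archive_rule("NEARLINE", 30, num_newer_versions=1)]}

print(json.dumps(lifecycle, indent=2))
```

Saved to a file, a configuration of this shape could be applied with `gsutil lifecycle set lifecycle.json gs://BUCKET_NAME`. Nearline fits here because the archived versions are read about once a month, whereas Coldline is priced for less frequent access.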

- Your company’s infrastructure is on-premises, but all machines are running at maximum capacity. You want to burst to Google Cloud. The workloads on Google Cloud must be able to directly communicate to the workloads on-premises using a private IP range. What should you do?

- A. In Google Cloud, configure the VPC as a host for Shared VPC.

- B. In Google Cloud, configure the VPC for VPC Network Peering.

- C. Create bastion hosts both in your on-premises environment and on Google Cloud. Configure both as proxy servers using their public IP addresses.

- D. Set up Cloud VPN between the infrastructure on-premises and Google Cloud.

Answer

Correct answer: D

- You want to select and configure a solution for storing and archiving data on Google Cloud Platform. You need to support compliance objectives for data from one geographic location. This data is archived after 30 days and needs to be accessed annually. What should you do?

- A. Select Multi-Regional Storage. Add a bucket lifecycle rule that archives data after 30 days to Coldline Storage.

- B. Select Multi-Regional Storage. Add a bucket lifecycle rule that archives data after 30 days to Nearline Storage.

- C. Select Regional Storage. Add a bucket lifecycle rule that archives data after 30 days to Nearline Storage.

- D. Select Regional Storage. Add a bucket lifecycle rule that archives data after 30 days to Coldline Storage.

Answer

Correct answer: D

- Your company uses BigQuery for data warehousing. Over time, many different business units in your company have created 1000+ datasets across hundreds of projects. Your CIO wants you to examine all datasets to find tables that contain an employee_ssn column. You want to minimize effort in performing this task. What should you do?

- A. Go to Data Catalog and search for employee_ssn in the search box.

- B. Write a shell script that uses the bq command line tool to loop through all the projects in your organization.

- C. Write a script that loops through all the projects in your organization and runs a query on INFORMATION_SCHEMA.COLUMNS view to find the employee_ssn column.

- D. Write a Cloud Dataflow job that loops through all the projects in your organization and runs a query on INFORMATION_SCHEMA.COLUMNS view to find employee_ssn column.

Answer

Correct answer: A

- You create a Deployment with 2 replicas in a Google Kubernetes Engine cluster that has a single preemptible node pool. After a few minutes, you use kubectl to examine the status of your Pods and observe that one of them is still in Pending status. What is the most likely cause?

- A. The pending Pod’s resource requests are too large to fit on a single node of the cluster.

- B. Too many Pods are already running in the cluster, and there are not enough resources left to schedule the pending Pod.

- C. The node pool is configured with a service account that does not have permission to pull the container image used by the pending Pod.

- D. The pending Pod was originally scheduled on a node that has been preempted between the creation of the Deployment and your verification of the Pods’ status. It is currently being rescheduled on a new node.

Answer

Correct answer: D

- You want to find out when users were added to Cloud Spanner Identity and Access Management (IAM) roles on your Google Cloud Platform (GCP) project. What should you do in the GCP Console?

- A. Open the Cloud Spanner console to review configurations.

- B. Open the IAM & admin console to review IAM policies for Cloud Spanner roles.

- C. Go to the Stackdriver Monitoring console and review information for Cloud Spanner.

- D. Go to the Stackdriver Logging console, review admin activity logs, and filter them for Cloud Spanner IAM roles.

Answer

Correct answer: D
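As a rough illustration of the filtering step in option D, the sketch below assembles a log filter string for Admin Activity audit logs. The `logName` format is the standard one for Admin Activity logs. The `policyDelta` field paths reflect how IAM policy changes commonly appear in these entries and should be treated as an assumption to verify against real log entries. The project ID `my-project` is a placeholder.

```python
def spanner_iam_audit_filter(project_id):
    """Build a log filter for IAM bindings added on Cloud Spanner roles."""
    log_name = f"projects/{project_id}/logs/cloudaudit.googleapis.com%2Factivity"
    clauses = [
        f'logName="{log_name}"',
        # IAM policy changes are recorded as SetIamPolicy calls.
        'protoPayload.methodName="SetIamPolicy"',
        # Assumed field paths: keep only bindings that were added ...
        'protoPayload.serviceData.policyDelta.bindingDeltas.action="ADD"',
        # ... and whose role name contains the Cloud Spanner prefix.
        'protoPayload.serviceData.policyDelta.bindingDeltas.role:"roles/spanner."',
    ]
    return " AND ".join(clauses)


print(spanner_iam_audit_filter("my-project"))
```

Each matching entry carries a timestamp, which answers the "when" part of the question; the IAM page alone shows only the current policy.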

- Your company implemented BigQuery as an enterprise data warehouse. Users from multiple business units run queries on this data warehouse. However, you notice that query costs for BigQuery are very high, and you need to control costs. Which two methods should you use? (Choose two.)

- A. Split the users from business units to multiple projects.

- B. Apply a user- or project-level custom query quota for BigQuery data warehouse.

- C. Create separate copies of your BigQuery data warehouse for each business unit.

- D. Split your BigQuery data warehouse into multiple data warehouses for each business unit.

- E. Change your BigQuery query model from on-demand to flat rate. Apply the appropriate number of slots to each Project.

Answer

Correct answer: B, E

- You are building a product on top of Google Kubernetes Engine (GKE). You have a single GKE cluster. For each of your customers, a Pod is running in that cluster, and your customers can run arbitrary code inside their Pod. You want to maximize the isolation between your customers’ Pods. What should you do?

- A. Use Binary Authorization and whitelist only the container images used by your customers’ Pods.

- B. Use the Container Analysis API to detect vulnerabilities in the containers used by your customers’ Pods.

- C. Create a GKE node pool with a sandbox type configured to gvisor. Add the parameter runtimeClassName: gvisor to the specification of your customers’ Pods.

- D. Use the cos_containerd image for your GKE nodes. Add a nodeSelector with the value cloud.google.com/gke-os-distribution: cos_containerd to the specification of your customers’ Pods.

Answer

Correct answer: C
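To make option C concrete, here is a minimal sketch of a Pod manifest that opts into the sandboxed runtime. It is built as a Python dictionary and printed as JSON, which `kubectl apply -f` also accepts. The Pod name and image are hypothetical; the only line that matters for isolation is `runtimeClassName: gvisor`.

```python
import json


def sandboxed_pod(name, image):
    """Return a Pod manifest that requests the gVisor runtime class."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Schedules the Pod onto sandbox-enabled nodes and runs it under gVisor.
            "runtimeClassName": "gvisor",
            "containers": [{"name": name, "image": image}],
        },
    }


# Hypothetical customer workload.
manifest = sandboxed_pod("customer-a", "gcr.io/example-project/customer-a:latest")
print(json.dumps(manifest, indent=2))
```

The matching node pool would be created with the sandbox option, for example `gcloud container node-pools create POOL_NAME --cluster=CLUSTER_NAME --sandbox type=gvisor`. Without such a node pool in the cluster, a Pod requesting this runtime class cannot be scheduled.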

-

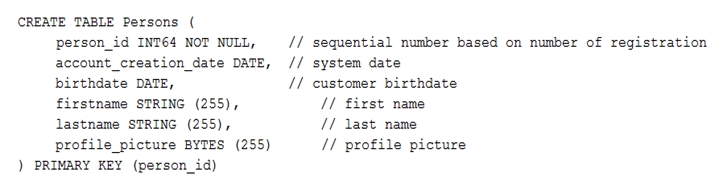

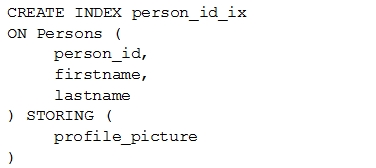

Your customer has implemented a solution that uses Cloud Spanner and notices some read latency-related performance issues on one table. This table is accessed only by their users using a primary key. The table schema is shown below. You want to resolve the issue. What should you do?

- A. Remove the profile_picture field from the table.

- B. Add a secondary index on the person_id column.

- C. Change the primary key to not have monotonically increasing values.

- D. Create a secondary index using the following Data Definition Language (DDL):

Answer

Correct answer: C
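One common way to avoid monotonically increasing keys is to bit-reverse a sequential counter, so that consecutive values land far apart in the key space instead of piling onto the same split. The sketch below shows the idea. The function names are hypothetical, and whether this or another approach (such as UUIDs or hashing) suits the table in the question is not stated in the source.

```python
def bit_reverse64(n):
    """Reverse the 64-bit binary representation of a non-negative integer."""
    if not 0 <= n < 2**64:
        raise ValueError("n must fit in 64 unsigned bits")
    return int(format(n, "064b")[::-1], 2)


def to_signed64(n):
    """Map an unsigned 64-bit value onto the signed range used by INT64 columns."""
    return n - 2**64 if n >= 2**63 else n


def spread_key(sequence_value):
    """Turn a sequential ID into a key that is no longer monotonically increasing."""
    return to_signed64(bit_reverse64(sequence_value))


# Consecutive sequence values map to keys that are far apart.
for seq in (1, 2, 3, 4):
    print(seq, spread_key(seq))
```

Because the transformation is its own inverse on the unsigned value, the original sequence number can still be recovered from a stored key when needed.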

- Your finance team wants to view the billing report for your projects. You want to make sure that the finance team does not get additional permissions to the project. What should you do?

- A. Add the group for the finance team to the roles/billing.user role.

- B. Add the group for the finance team to the roles/billing.admin role.

- C. Add the group for the finance team to the roles/billing.viewer role.

- D. Add the group for the finance team to the roles/billing.projectManager role.

Answer

Correct answer: C

- Your organization has strict requirements to control access to Google Cloud projects. You need to enable your Site Reliability Engineers (SREs) to approve requests from the Google Cloud support team when an SRE opens a support case. You want to follow Google-recommended practices. What should you do?

- A. Add your SREs to roles/iam.roleAdmin role.

- B. Add your SREs to roles/accessapproval.approver role.

- C. Add your SREs to a group and then add this group to roles/iam.roleAdmin role.

- D. Add your SREs to a group and then add this group to roles/accessapproval.approver role.

Answer

Correct answer: D
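The "grant the role to a group, not to individuals" pattern in option D can be sketched as an edit to an IAM policy document. The helper below works on a plain dictionary in the standard policy shape (`bindings` with `role` and `members`). The group address `sre-team@example.com` and the function name `add_binding` are hypothetical.

```python
def add_binding(policy, role, member):
    """Add member to role in an IAM policy dict, creating the binding if needed."""
    bindings = policy.setdefault("bindings", [])
    for binding in bindings:
        if binding["role"] == role:
            # Role already bound: add the member only if it is missing.
            if member not in binding["members"]:
                binding["members"].append(member)
            return policy
    bindings.append({"role": role, "members": [member]})
    return policy


# Grant the Access Approval approver role to a group rather than to each SRE.
policy = add_binding({}, "roles/accessapproval.approver", "group:sre-team@example.com")
print(policy)
```

With the group bound once, team membership changes are handled in the group itself and the project policy does not need to be touched again. The same binding could be created from the command line with `gcloud projects add-iam-policy-binding PROJECT_ID --member=group:sre-team@example.com --role=roles/accessapproval.approver`.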
